ASCOM Standard (Provisional) for controlling a Video Camera

ASCOM IVideo Discussion

Before you start

Many people have asked a question similar to this: "Why do you build another interface for video when there is already DirectShow?". This web page answers that question in detail and you should read all of it. However, if you want a quick answer you can check the quick FAQ about IVideo and DirectShow.

What Is Astronomical Video?

The word 'video' means different things to different people. For some perhaps video is about making holiday video clips of their family using their own camcorder; for others video is scientific data saved to a file that can be replayed later many times in order to extract useful information; video could also be defined as watching moving pictures on a TV, computer or other screen. Before we get down to the reasoning behind the proposed IVideo interface it is really important to put 'video' and 'astronomy' together and look at what 'astronomical video' actually means for the people that use it. For this purpose I have divided the potential users into groups and will go through each of the groups and try to identify what is important for that group.

Astronomical Video for 'Deep Sky Imagers'

The Deep Sky Imagers use astronomical video as a convenient way to display images of deep sky objects (or other astronomical objects) on a TV or a computer screen to enjoy them and share them with others. This is indeed a great way to show the stunningly beautiful objects in the sky to friends, to peers at star parties or even to broadcast them on the internet to anyone interested. Usually the Deep Sky Imagers use their very sensitive video cameras to take long exposures of up to several seconds and display them as a video signal on a screen. Each exposure is displayed on the screen, for the duration of the exposure, using a standard analogue TV frame rate of 29.97 (NTSC) or 25 (PAL) frames per second. Sometimes the Deep Sky Imagers also record a video file and later stack many frames from it to produce impressive still pictures of the deep sky objects.

What is important for the Deep Sky Imagers is to be able to adjust all settings of their video cameras in order to get the best shots, and they certainly want to achieve a very good focus. Once everything has been set up the Deep Sky Imagers would like to either show the video on a separate TV screen, show it on the computer screen, broadcast it on the internet or sometimes record it to a video file. Why might the Deep Sky Imagers be interested in an ASCOM interface? Mostly because they want to be able to control their video cameras remotely and from a computer.

Astronomical Video for 'Planetary Imagers'

Planetary Imagers use a video camera to record video of planets in the Solar System. Later, using stacking and/or lucky imaging techniques, they manage to produce stunning high detail images of those planets. Some of the Planetary Imagers are led by their desire to acquire beautiful high resolution images of the planets, their satellites and eclipses or occultations of the satellites and the planet. Others are monitoring the slow changes of the storms on the surfaces of Jupiter or Saturn and are even searching for and finding impacts by asteroids or comets with Jupiter.

Some Planetary Imagers use web cameras while others want to improve their odds against the turbulence and use digital video cameras operating at high speeds (e.g. 100 frames per second or more). Planetary Imagers always need to record a video file, and displaying the video frames on the screen is mostly important for targeting, focusing and camera adjustments. However good focusing is very important, as is good control of the video camera settings. Planetary Imagers may be interested in an ASCOM interface because they like working remotely and sometimes in unattended mode.

Astronomical Video for 'Occultationists'

Occultationists use video to time events such as Lunar or asteroidal occultations of stars, time Baily's Beads during solar eclipses, time rare events such as transits of planets or time any other astronomical event where determining the time of the event is a useful observation. Each video frame in those observations has an associated high precision timestamp - usually made by a GPS and usually accurate to 0.001 second. The timestamp may be either embedded in the image as "on screen display" or can be encoded in the pixels (as some digital video cameras do) or can be part of the frame raw data (e.g. recorded as the status video channel by the Astronomical Digital Video System (ADVS) or part of the FITS metadata). For Occultationists timing and photometric accuracy are very important. To increase photometric accuracy Occultationists would be very happy to use digital video cameras that produce images with more than 8 bits. Occultationists also typically use a range of different video cameras as they sometimes go to observe at a mobile site while also observing unattended from home. While they usually observe the live event on the screen, the Occultationists always need to record a video file with the embedded frame timestamps for their observations to be useful. Why might the Occultationists be interested in using the ASCOM interface? Typically to automate the observations at their home observatory and observe fully unattended, which would require finding and focusing the target as part of the process.

Astronomical Video for 'Video Astrometrists'

Video Astrometrists use video to measure the precise positions of celestial objects - typically fast moving Near Earth Asteroids or bright comets. Timing accuracy is quite important - particularly for fast moving objects - and video frames are always timestamped by a GPS or other highly accurate time source. A wide range of exposures can be used, from 20fps for bright and very fast moving NEAs to a couple of seconds for fainter comets. Video Astrometrists always record a video file during their observations so they can measure the positions of the observed objects later. The field is viewed on the screen for pointing and focusing purposes only.

Video Astrometrists may be interested in an ASCOM interface because they would like to control their camera and recorder from a computer - sometimes remotely and/or unattended. Good focusing is very important and sometimes Video Astrometrists may also want to do plate-solving of selected frames while the camera is not recording so they can improve their pointing and identify the objects they want to measure.

Astronomical Video for 'Meteor Observers'

Meteor Observers usually operate, unattended, one or more cameras pointed statically at a part of the sky to record meteors. The detection and recording of meteors is done by specialized software that analyses the video in real-time and, when motion is detected, records a short video of the motion (meteor). The software may also do a plate solve and record in a text file the coordinates of the position where the object appeared and disappeared along with the time when this happened. While all meteors have to be timed, the accuracy is usually not required to be in the order of 0.05 ms as with occultations for example, but could be in the order of 1-2 seconds.

Usually Meteor Observers either use non integrating video cameras or use integrating video cameras with very short integration - 2 or at most 4 frames. The high frame rate resolution is particularly important when determining the speed of the observed meteors. Why might Meteor Observers be interested in using the ASCOM interface? The main advantage would be to allow them to use a wider range of video cameras with the same software. Having said that, I realize that the meteor detection software is very specialized and it may turn out that the standard DirectShow interface works better for analogue video cameras. However with the increased use of digital video cameras an ASCOM interface will become more attractive for this job.

Astronomical Video Use Cases

As we can see we have different types of observers with slightly different needs, but all of those observers use video. All of them need, to a different extent and with a different importance, all of the following: focus the image, adjust the camera settings, display video frames on the screen, record a video file. The table below goes into more detail about what is needed by each of the groups of observers we have already defined:

| Requirement | Deep Sky Imagers | Planetary Imagers | Occultationists | Video Astrometrists | Meteor Observers |
|---|---|---|---|---|---|
| Focusing | Essential | Essential | Essential | Essential | Optional |
| Adjusting Camera Settings | Essential | Important | Important | Important | Not Essential |
| Display Video on the Screen | Essential | Important | Important | Important | Optional |
| Record a Video File | Not Essential | Essential | Essential | Essential | Essential |
| Frame Time Accurate to 1 ms | Not Required | Optional | Essential | Essential | Optional |
| Frame Time Accurate to 1 sec | Optional | Desired | Insufficient | Insufficient | Essential |
| More Than 8 bit Image Data | Not Required | Not Required | Desired | Desired | Not Required |
| Real-time Analysis | Not Required | Not Required | Not Required | Not Required | Essential |

Next we are going to look at how different operations will likely be implemented in an IVideo driver.

Receiving Video Images: First of all to be able to do anything useful at all the driver will need to be able to obtain the actual video images for each video frame. There are generally two ways we do this - either the analogue signal from the video camera goes into a frame grabber attached to the computer and we talk to the frame grabber directly or the camera is attached directly to the computer (via USB or FireWire) and we receive data on the USB/FireWire port. This means that for the analogue video cameras the driver will actually control a frame grabber while for other cameras it will talk to the camera. Digital video cameras may also return images that have a bit depth of more than 8 bit so we cannot represent our video frames as Bitmaps and cannot use DirectShow for some of the cameras.

Because for many of our users it is important to associate an accurate timestamp with each video frame and/or record data in more than 8 bits depth, the video frame needs to contain more than just the image data. This is why we will need a special object to represent a video frame in our interface - this video frame object we will call IVideoFrame. Other typical information that each video frame will need includes the sequential frame number and additional image information that some cameras can provide such as shutter speed, white balance and others. We can think of the video frames as sequences of states including pixels (image) and other data (when and under what conditions the image was taken). It is like having a FITS file for each video frame, only FITS files are not very convenient to manipulate and it takes significant time to build them.
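To make the idea of a frame that carries more than pixels concrete, here is a minimal Python sketch. It is not the ASCOM definition: the class name `VideoFrame`, the snake_case field names, the use of a dictionary for the additional information and the sample values are all assumptions made for illustration. Only the four pieces of information themselves (pixels, frame number, exposure duration, additional info) come from this page.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass(frozen=True)
class VideoFrame:
    """Illustrative stand-in for an IVideoFrame; not the ASCOM definition."""

    frame_number: int             # sequential frame number
    exposure_duration: float      # exposure duration in seconds
    image_array: List[List[int]]  # integer pixels, assumed to be indexed [x][y]
    # Assumption: extra per-frame data is modelled as a simple key/value map.
    image_info: Dict[str, Any] = field(default_factory=dict)

    @property
    def width(self) -> int:
        return len(self.image_array)

    @property
    def height(self) -> int:
        return len(self.image_array[0]) if self.image_array else 0


# A tiny 3 x 2 frame holding 12-bit values; all values are made up.
frame = VideoFrame(
    frame_number=1,
    exposure_duration=0.04,
    image_array=[[0, 4095], [1024, 2048], [17, 3000]],
    image_info={"exposure_start_utc": "made-up timestamp", "gain": 10},
)
```

Keeping the pixels as plain integers is what allows values above 255 to survive, which a bitmap based frame could not do.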

Controlling the Video Camera: 80% of our users will always need to record a video and 75% of them will not want to deal with recording the video on their own. This means that it is beneficial if the driver is able to record a video directly to a file. A great way to do this is for our interface to provide methods for starting and stopping a video recording to a file. Meteor Observers are the only group that will want to do real-time analysis and take control of the video recording process. Everyone else will want to use the video recording provided by the driver. This will actually make our driver more than just an IVideo - it will also make it a video recorder. However, being able to start and stop a video recording without worrying about it is an absolutely essential part of the problem we are trying to solve, and this is why it is a first class citizen of our IVideo interface.

The video file format and the codecs that will be used is something that will be controlled by the driver and possibly configured from the setup dialog of the driver by the end user. For more than 8 bit videos and for the more complex video systems the file format will most likely be custom. It could even be a sequence of many FITS files if this is the choice of the driver. At this stage the purpose of the IVideo interface is to work with a camera and not with a recorded video file so there will be no methods for opening and playing back a video file. This will be done by the play back and reduction software.

To enable existing software that already works with ICameraV2 to process video images easily, we are going to use exactly the same pixel format for the video frame image - including the row and column ordering - as is currently used for the ICamera interface.

Some digital video cameras and video systems may be able to work in a single image triggered mode - which is exactly the way a CCD camera works. If those systems want to make it possible for a user to use them as CCD cameras then their driver will also implement the ICameraV2 interface separately. There are interesting questions of how to use the same camera as IVideo and ICamera at the same time and how the client software will achieve this. In regards to this a number of suggestions are made to the driver and client software developers to better handle this use case.

Adjusting Camera Settings: Video cameras may have a large number of settings. Some of them are common among cameras and others are specialized. The IVideo interface should allow all important camera settings to be controlled by client software. This will be done in two different ways - the common video settings will be adjusted directly via a corresponding property on the interface and all other more specialized settings will be adjusted using a more generic action mechanism. The only common camera settings that are shared between all video cameras are Gamma and Gain. All other settings (including White Balance) are specialized and will be controlled using the standard ASCOM SupportedActions/Action interface. It was decided to make the White Balance a specialized property because there are very few cameras that actually support it. In contrast all or almost all video cameras have Gain and Gamma (even if Gamma is a fixed value) because both Gain and Gamma are important characteristics of the video signal itself.
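The split between common properties and named actions can be sketched as follows. This is a hedged illustration in Python, not a real driver: the class `SketchVideoDriver`, the action names `SetWhiteBalance` and `GetWhiteBalance`, the parameter string `"5600K"` and the exception class are all hypothetical. Only the general pattern, a list of supported action names plus a call that takes an action name and a parameter string, follows the SupportedActions/Action mechanism mentioned above.

```python
from typing import Callable, Dict, List


class ActionNotImplementedError(Exception):
    """Raised when a client asks for an action this driver does not offer."""


class SketchVideoDriver:
    """Hypothetical driver showing common versus specialized settings."""

    def __init__(self) -> None:
        self.gain = 0      # common setting, exposed directly
        self.gamma = 1.0   # common setting, exposed directly
        self._white_balance = "auto"
        # Specialized settings are reached by name through action().
        self._actions: Dict[str, Callable[[str], str]] = {
            "SetWhiteBalance": self._set_white_balance,
            "GetWhiteBalance": lambda _parameters: self._white_balance,
        }

    @property
    def supported_actions(self) -> List[str]:
        return sorted(self._actions)

    def action(self, name: str, parameters: str) -> str:
        handler = self._actions.get(name)
        if handler is None:
            raise ActionNotImplementedError(name)
        return handler(parameters)

    def _set_white_balance(self, parameters: str) -> str:
        self._white_balance = parameters
        return "OK"


driver = SketchVideoDriver()
driver.gain = 12  # common setting: plain property access
if "SetWhiteBalance" in driver.supported_actions:
    driver.action("SetWhiteBalance", "5600K")  # specialized setting
```

A client that checks the list of supported actions first can degrade gracefully on cameras that have no white balance control at all.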

Frame Rate and Integration: Analogue video cameras output frames with a fixed frame rate that cannot be changed while the digital video cameras can have a variable frame rate. When it comes to exposure duration there are again two types of analogue video cameras - integrating and non integrating. The integrating video cameras have the shutter open for the duration of the exposure and after the exposure is completed they output the same video frame (the result from the exposure) at their supported frame rate for the duration of the next exposure. Non integrating video cameras have a fixed exposure duration that cannot be changed. Some integrating video cameras also offer exposures shorter than the frame rate interval. In this case the shutter is only open for the corresponding exposure time and for the rest of the frame interval the camera is not collecting light. Digital video cameras usually output a single frame for each exposure that they produce regardless of the duration of the exposure.

When it comes to changing the frame rate and/or duration of the integration interval - we need a solution that will work with all types of video devices we talked about so far. The proposal is to use the SupportedIntegrationRate property to return the list of supported integration rates and use IntegrationRate to set a new integration rate. Some cameras however support setting a specific exposure in seconds. For those cameras the driver will need to choose what exposures will be supported and offer the limited list of those exposures. It may also allow the end user to configure the supported exposures in the Setup Dialog. Supporting only a limited set of exposures may be seen as a limitation by some, but considering the use cases there is no reason why this limitation will cause any serious issues. For most cameras increasing the camera exposure by a factor of 2 is the best approach to take. Many of the integrating video cameras use exactly this pattern to also offer a limited set of integration times.
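As a rough illustration of the "factor of 2" pattern, the following Python sketch builds a list of exposures starting at one frame interval and doubling at each step, then picks one by index. The function name, the choice of 8 steps and the 25 frames per second starting point are assumptions for the example; a real driver would publish whatever list its camera supports.

```python
from typing import List


def doubling_integration_rates(frame_rate_fps: float, steps: int) -> List[float]:
    """Return exposure durations in seconds: 1 frame, 2 frames, 4 frames, ..."""
    if frame_rate_fps <= 0 or steps < 1:
        raise ValueError("frame rate must be positive and steps at least 1")
    frame_interval = 1.0 / frame_rate_fps
    return [frame_interval * (2 ** i) for i in range(steps)]


# PAL timing: one frame lasts 1/25 s, so the list runs from 0.04 s to 5.12 s.
supported = doubling_integration_rates(25.0, 8)

# The client selects an exposure by its position in the list.
selected_index = 3
exposure_seconds = supported[selected_index]  # 8 frames, about 0.32 s
```

Selecting by index keeps the client independent of whether the underlying device thinks in terms of integrated frames or exposure seconds.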

Displaying Frames and Buffering: Buffering is an important part of any video stream processing. Its purpose is to ensure that random delays in the client software or the operating system will not result in a large loss of video frames. The buffer is a queue of video frames - the driver puts the newly arrived video frames at the end of the queue and gives the client software the first frame in the queue when the next frame is requested. If the client software doesn't request a new frame for a long time and the buffer runs out of space the driver will have to start dropping frames (or run out of memory). Because all IVideoFrames have a sequential frame number it will be trivial for the client software to determine if there was a dropped frame. The feeding of video frames happens independently from the camera and video control and its implementation in the driver needs to be multithreaded and thread safe.
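The queue behaviour described above can be sketched in a few lines of Python. This is only one possible design under stated assumptions: the class and function names are invented, a frame is reduced to a `(frame number, payload)` tuple, and the policy of discarding the oldest frame when the buffer is full is my choice for the example, since the text does not say which frames a driver should drop.

```python
import threading
from collections import deque
from typing import Deque, List, Optional, Tuple

# Stand-in for an IVideoFrame: (sequential frame number, pixel payload).
Frame = Tuple[int, bytes]


class FrameBuffer:
    """Thread-safe bounded queue that discards the oldest frame when full."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._frames: Deque[Frame] = deque(maxlen=capacity)
        self._lock = threading.Lock()

    def put(self, frame: Frame) -> None:
        # Called from the driver's capture thread for every new frame.
        with self._lock:
            self._frames.append(frame)  # a full deque drops its oldest item

    def get(self) -> Optional[Frame]:
        # Called by the client; returns the frame at the front of the queue.
        with self._lock:
            return self._frames.popleft() if self._frames else None


def missing_frame_numbers(previous: int, current: int) -> List[int]:
    """Frame numbers skipped between two consecutively received frames."""
    return list(range(previous + 1, current))


buffer = FrameBuffer(capacity=3)
for number in range(1, 6):   # frames 1..5 arrive while the client is busy
    buffer.put((number, b""))

first = buffer.get()         # frame 3: frames 1 and 2 were dropped
second = buffer.get()        # frame 4
dropped = missing_frame_numbers(0, first[0])  # [1, 2]
```

With a capacity of 1 the same class degenerates into "always hand out the latest frame", which is the unbuffered behaviour.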

The size of the buffer is obviously an important characteristic of the driver and its best value will depend largely on the hardware that runs the video camera, the client software and what it will be doing with the frames. The buffer size needs to be a configuration parameter which is best suited for the setup dialog as it is unlikely that it will need to be changed in the same client software session.

Buffering however is not a must. Many of the valid video camera applications (in fact all applications except detecting and recording meteors) will not need to process all video frames. The need to show the video on the screen for all those use cases is related to the need to focus the camera and see how changing camera settings affects the produced image. For those applications it is perfectly fine to use a buffer size of 1, i.e. to not have a buffer at all but always receive the latest available video image at the time the client software requests it. Not having to implement a buffer in the driver also simplifies its development, and because of this the support for buffering in the IVideo interface is viewed as an optional choice of the driver developer.

On the other hand some client applications may require a buffered frame queue in order to operate correctly and there should be a way for them to check at runtime if the current driver supports buffering. This can be done by checking the VideoFramesBufferSize property, which will return a number greater than 1 if buffering is supported.
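A client side check along these lines might look like the Python sketch below. The function names and the two mode labels are hypothetical; the only rule taken from the text is that a reported buffer size greater than 1 means buffering is supported.

```python
def supports_buffering(video_frames_buffer_size: int) -> bool:
    """True when the reported buffer size indicates a real frame queue."""
    return video_frames_buffer_size > 1


def choose_mode(video_frames_buffer_size: int, needs_every_frame: bool) -> str:
    """Pick how the client will consume frames, or refuse if it cannot work."""
    buffered = supports_buffering(video_frames_buffer_size)
    if needs_every_frame and not buffered:
        raise RuntimeError("driver does not buffer frames; frames may be missed")
    return "buffered" if buffered else "latest-frame"


# A focusing aid is happy with the latest frame only.
focus_mode = choose_mode(1, needs_every_frame=False)

# A real-time analysis client needs the queue.
analysis_mode = choose_mode(50, needs_every_frame=True)
```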

Defining the Purpose of the IVideo Interface

The proposed IVideo ASCOM Interface is designed considering the characteristics and usage of the following types of video devices:

| Video Device Type | Popular Camera Models | Groups with Special Interest Using Those Cameras | Devices and Ports Controlled by the Driver |
|---|---|---|---|
| Analogue Non Integrating Camera | WAT-902H, PC164, PC164EX2 | Occultationists, Video Astrometrists, Meteor Observers | Frame Grabber (PCI or USB) |
| Analogue Integrating Camera | WAT-120N, Mintron, GSTAR, Mallincam | Deep Sky Imagers, Occultationists, Video Astrometrists, Meteor Observers | Frame Grabber (PCI or USB) and Video Camera (Serial, LPT, USB) |
| Digital Camera | Range of PointGrey cameras (including Flea3, Grasshopper Express). Range of Lumenera cameras. | Planetary Imagers, Occultationists | Video Camera (USB, FireWire) |
| Video System | ADVS | Occultationists, Video Astrometrists | Video System (USB, FireWire, Serial) |

The IVideo ASCOM interface is designed to provide a standard, device independent abstract interface for controlling an astronomical video camera to do attended or unattended astronomical video observations - by recording a video file or providing a video signal on the screen. The interface defines an abstraction from the hardware that runs the video and focuses on what the video observer needs to accomplish as part of the observations. The IVideo interface has been built for the following specific tasks:

- Instructing the camera to record a video file and save it on the file system

- Occasionally obtaining individual video frames as the camera is running in order to help with focusing, frame rate adjustments, field identification and others.

- Providing all video frames in a buffered manner that can be used to do real-time analysis (Optional - if the driver implements it)

Unique IVideo Interface Members

The IVideo interface has been heavily influenced by the current ASCOM ICameraV2 interface for controlling a CCD camera. If we ignore all members of the interface that are duplicated from ICameraV2, we have the following list of unique video related members of the IVideo interface:

VideoCaptureDeviceName - All video cameras that output analogue video will require a frame grabber (capturing) device to connect to the computer and provide the video frames in digital format. When such a capturing device is used the VideoCaptureDeviceName will return its name or designation for information purposes - i.e. so the end user knows which capturing device is currently in use. In order to receive video images from analogue video cameras the driver will have to talk to the capturing device rather than the camera itself.

ExposureMin, ExposureMax - The minimum and maximum exposure (integration time) that the camera supports, in seconds.

FrameRate - The frame rate at which the camera is running. This can be either PAL, NTSC or Variable. The frame rate is used for information. To change the exposure/integration of the camera, IntegrationRate should be used.

SupportedIntegrationRate - List of exposure rates (integration times) supported by the video camera.

IntegrationRate - Index into the SupportedIntegrationRate array for the selected camera integration rate.

LastVideoFrame, LastVideoFrameImageArrayVariant - Returns the last/current IVideoFrame or the IVideoFrame at the front of the queue when buffering is used.

VideoFramesBufferSize - The size of the video frames buffer or 1 if no buffering is used.

BitDepth - The bit depth of the images that the camera can produce. For all analogue cameras this will be 8 bit while digital cameras usually can support more (12 bit or 16 bit).

VideoFileFormat - The file format of the recorded video file, e.g. AVI, MPEG, ADV etc.

VideoCodec - The video codec used to record the video file, e.g. XVID, DVSD, YUY2, HFYU etc.

Gains - List of gain values supported by the camera.

Gain - Gets or sets the selected camera gain.

GainMax, GainMin - The maximum and minimum value for the Gain.

Gammas - The list of Gamma values supported by the video camera.

Gamma - Gets or sets the selected camera Gamma.

StartRecordingVideoFile - Instructs the driver to start recording a file.

StopRecordingVideoFile - Stops the recording of the current file.

The IVideoFrame Interface

ImageArray - Video frame pixels.

ImageArrayVariant - Video frame pixels as variant.

FrameNumber - The current video frame number.

ExposureDuration - The exposure duration in seconds.

ImageInfo - A generic property that contains any additional information about the video frame that the driver can provide. For example when the video system can also time accurately the start of the exposure, the start of the exposure can be returned here along with other properties.

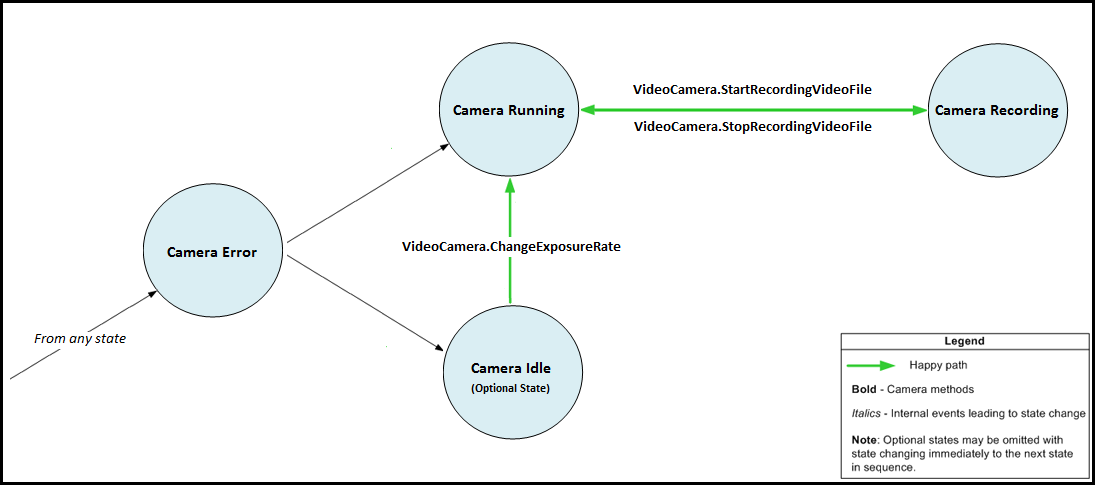

The IVideo State

In video cameras the process of acquiring a video frame is generally atomic. Some digital cameras may be an exception but for all other video cameras the video frames simply become available and the client software cannot cancel a single exposure while it is being produced. Because of this the states of a video camera (IVideo) will be very different than the states of a CCD camera (ICameraV2). While for a CCD camera the state is all about the sub processes involved in exposing and reading an image, for a video camera it is about whether video signal is available and whether the system is recording it. As mentioned earlier the IVideo interface is more than just describing the video camera hardware, it is also about controlling the video grabber and the process of recording a video file. The following states are proposed to be used for the IVideo.CameraState property:

Thanks to Peter Simpson for his ICameraV2 diagram which I used to create the graphics and the legend for the IVideo diagram.

Using a Video Camera as a CCD Camera

Almost all digital video cameras allow both taking single exposures and running in a video mode. The single exposures, also called triggered exposures, allow those digital video cameras to be used as a CCD camera. Instead of adding a bunch of new members to the IVideo interface to work with the single triggered exposure mode, it is much better if those cameras simply implement the ICameraV2 interface if they indeed can work as a CCD camera.

While this is a good solution that avoids cluttering the IVideo interface it also means that the same device can work in two different modes, and that will cause errors if both interfaces are used at the same time. After all we can either use the system as a video camera or we can use it as a CCD camera but not both at the same time.

When trying to connect to the ICameraV2 interface while the IVideo interface is already connected, ideally we would like to get a connection error saying that someone else has already connected to the camera. If this is not possible then we would expect the connection to succeed but to put the IVideo in the Errored state. From the IVideo point of view the video frame flow may have stopped for an unknown reason (the reason being that the ICameraV2 interface connected and set the camera to manually triggered exposure mode). So this is an error condition which will change the state of the IVideo to Errored.
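The preferred outcome, a connection error when the other interface already owns the camera, could be arranged with a small arbiter shared by both drivers. The Python sketch below is an assumption about how a driver author might do this, not something the proposal prescribes; `SharedCamera`, `DeviceInUseError` and the method names are hypothetical.

```python
import threading
from typing import Optional


class DeviceInUseError(Exception):
    """Connection refused because the other interface owns the hardware."""


class SharedCamera:
    """Hypothetical arbiter shared by an IVideo and an ICameraV2 driver."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._owner: Optional[str] = None

    @property
    def owner(self) -> Optional[str]:
        with self._lock:
            return self._owner

    def connect(self, interface_name: str) -> None:
        with self._lock:
            if self._owner not in (None, interface_name):
                raise DeviceInUseError(
                    f"camera already connected through {self._owner}"
                )
            self._owner = interface_name

    def disconnect(self, interface_name: str) -> None:
        with self._lock:
            if self._owner == interface_name:
                self._owner = None


camera = SharedCamera()
camera.connect("IVideo")
try:
    camera.connect("ICameraV2")   # refused while IVideo is connected
    refused = False
except DeviceInUseError:
    refused = True

camera.disconnect("IVideo")
camera.connect("ICameraV2")       # succeeds once IVideo has let go
```

When the two drivers cannot share such an arbiter, the fallback of moving the IVideo side to the Errored state remains the only option.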

Comparison with ICameraV2

At present the following comments discuss the commonalities and differences and give reasons why some properties from ICameraV2 were used or not used in the IVideo interface:

1) Current video cameras are not sophisticated enough to support transmission of string commands to the devices directly. Because of this the CommandBlind(), CommandBool() and CommandString() methods are not included in the interface. Note that the available SupportedActions/Action members can be used to achieve similar results.

2) Controlling the exposure of an individual frame is done using the ChangeFrameExposure() method, passing one of the fixed exposure rates returned by the SupportedIntegrationRate property. Video camera exposure is controlled by both a frame rate and an integration time. All analogue cameras have a fixed frame rate (that corresponds to the PAL or NTSC standards) and allow the exposure to be controlled via the integration time. Digital video cameras however may support a range of native frame rates and don't have the concept of integration time as they only output one frame for each exposure. To avoid confusion and to allow for the support of both analogue and digital video cameras a new term "ExposureRate" has been defined. For analogue video cameras the ExposureRate is the integration time or exposure duration (when exposing for less than the duration of 1 frame) and for digital video cameras the ExposureRate has the meaning of the exposure duration at the current frame rate.

3) This is a list of ICameraV2 members that have not been used in the IVideo interface because they are either currently not supported by any of the video cameras or are not very useful for video. If some of those become wanted for a video camera then the SupportedActions/Action members could be used to emulate them.

- StartExposure(), StopExposure() and AbortExposure() - There are no individual exposures in video cameras

- ImageReady - There is no concept of exposure and the last frame is always 'ready'. Use the IVideoFrame.FrameNumber property to determine which is the current frame

- LastExposureDuration and LastExposureStartTime - They are now part of IVideoFrame

- ExposureResolution - The concept has been removed because the camera supports a fixed list of exposure rates (returned by the SupportedIntegrationRate property)

- NumX, NumY, StartX, StartY, MaxBinX, MaxBinY, BinX, BinY - no sub-framing and binning is supported for video cameras for simplicity.

- CanAbortExposure, CanAsymmetricBin, CanGetCoolerPower, CanSetCCDTemperature, CanStopExposure, CCDTemperature, CoolerOn, CoolerPower, HasShutter, HeatSinkTemperature - Not supported (irrelevant or removed for simplicity)

- SetCCDTemperature, BayerOffsetX, BayerOffsetY, CanFastReadout, FastReadout, PercentCompleted, ReadoutMode, ReadoutModes - Not supported (irrelevant or removed for simplicity)

- MaxADU, ElectronsPerADU, FullWellCapacity - Not supported, to determine the maximum ADU the BitDepth property should be used that returns number of bits per pixel
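For the last item, the ceiling implied by a given bit depth is simply 2 to the power of the number of bits, minus 1. A minimal Python sketch (the function name is mine):

```python
def max_adu(bit_depth: int) -> int:
    """Largest pixel value representable with the given bits per pixel."""
    if bit_depth < 1:
        raise ValueError("bit depth must be a positive integer")
    return (1 << bit_depth) - 1


for bits in (8, 12, 16):
    print(bits, max_adu(bits))  # prints 8 255, then 12 4095, then 16 65535
```

This is only the upper bound set by the pixel format; it says nothing about where a particular sensor actually saturates.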

FAQ about IVideo and DirectShow

How does IVideo compare to DirectShow? - IVideo is a higher layer abstraction. DirectShow typically works with 8 bit analogue video cameras and has been built to make video clips and movies work faster, focusing on user enjoyment while watching the films. IVideo is built as a single interface for all types of video devices including 12 bit and 14 bit digital video cameras and focuses on the astronomical value of video, which includes for example preserving the original CCD well values, which leads to better photometry.

In addition IVideo includes a lot more information in every video frame - something that DirectShow cannot do. This information may include very precise GPS timestamps, Gamma, Gain, Offset and other parameters for the video frame important for astronomers that are returned by many digital video cameras. For non integrating analogue video cameras probably none of this information will be available, however cameras such as Mintron and MallinCam allow computer control of the cameras and can indeed return a lot more information in the video frame.

How does IVideo handle codecs? - Codecs are independent from IVideo. The properties on the IVideo interface include a codec and file format for information purposes only, i.e. this is what the driver is configured to use. The driver may allow the codec to be changed from the setup dialog and it will have to handle all codec issues, which leaves IVideo independent of codecs. Client software that uses IVideo to render video frames will thus not have to worry about codecs.

How is colour handled? - The frame returned by IVideo is an array of integers and has exactly the same format as an image from a CCD camera. Colour cameras may return the pixels as a Bayer Matrix or as R, G and B "planes". Client software will need to render the video frames using the array of integer pixels and can do exactly what it currently does to display an ICamera image. The open source ASCOM Video Client software demonstrates one way in which this could be achieved. It is possible that the ASCOM Utilities will offer methods for fast conversion of ImageArray pixels into colour bitmaps that can be displayed directly and used by client software.
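As a rough idea of what rendering from an integer array involves, here is a Python sketch for the simplest case of a monochrome frame. It is not how the ASCOM Video Client does it. The function names are invented, the pixels are assumed to be indexed `[x][y]`, and scaling by dropping the least significant bits is just one simple choice among many possible display stretches.

```python
from typing import List


def to_8bit(image_array: List[List[int]], bit_depth: int) -> List[List[int]]:
    """Scale integer pixels to 0..255 by dropping the least significant bits."""
    if bit_depth < 8:
        raise ValueError("this sketch only handles 8 bits or more")
    shift = bit_depth - 8
    top = (1 << bit_depth) - 1
    # Clamp first so that out-of-range values cannot exceed 255 after shifting.
    return [[min(max(p, 0), top) >> shift for p in column] for column in image_array]


def to_rows(image_array: List[List[int]]) -> List[List[int]]:
    """Transpose [x][y] pixel storage into rows for a row-major display surface."""
    return [list(row) for row in zip(*image_array)]


# 3 columns (x) by 2 rows (y) of made-up 12-bit values.
frame_12bit = [[0, 4095], [2048, 1024], [16, 15]]
display_rows = to_rows(to_8bit(frame_12bit, 12))
# display_rows is [[0, 128, 1], [255, 64, 0]]
```

The original array stays untouched, so the full bit depth remains available for measurement while the reduced copy is used only for display.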

Will a DirectShow IVideo driver be available out of the box? - Yes, a Video Capture driver will be available out of the box and will be released with the IVideo interface. The driver will use DirectShow to capture the video. This driver will be able to work with any video camera that could be used on Windows via DirectShow. The driver will be limited to only capturing and recording the video and will not allow the camera to be controlled. A specialized driver will need to be built for cameras that provide analogue video but also allow direct control from a computer. We are exploring the possibility of allowing the Video Capture driver to be "extended" by such specialized drivers so they will only need to implement the camera control code and can get all the capturing code out of the box.

If you have any suggestions or comments please either contact me directly or even better be part of the ASCOM standard for Video Camera discussions on the ASCOM Yahoo Group - ASCOM-Talk

Hristo Pavlov

Ver 1 - 28 April 2012

Ver 2 - 23 June 2012

Ver 3 - 03 Nov 2012

Ver 4 - 19 Nov 2012 (Provisional SDK Ver 1)

Ver 5 - 24 Jan 2014 (Removed provisional SDK download links)